Harden PostgreSQL on Kubernetes and CloudNativePG

In this blog post I explained how to set up a highly available PostgreSQL cluster using the CloudNativePG PostgresSQL operator for kubernetes. One thing this post did not really cover was security - especially how to harden PostgreSQL itself to prevent unwanted database access and data breaches.

This post will outline general strategies to harden PostgreSQL and provides a hands-on example for how to implement security best-practices with the CloudNativePG postgres operator on kubernetes.

As this is quite a long post, please find the TLDR in the Summary.

PostgreSQL security hardening best practices

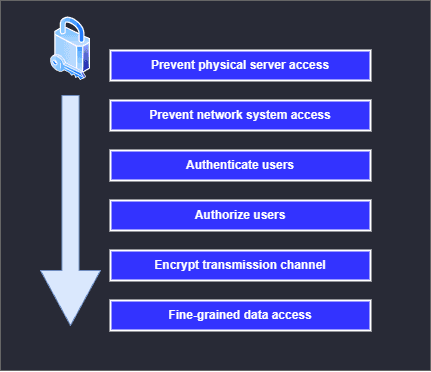

When talking about PostgreSQL security, we can think of it as any ordinary IT system. There are certain levels of security which we need to address:

- Physical and network system access

- Authentication

- Authorization

- Encryption

- Data access

PostgreSQL Security Levels

PostgreSQL Security Levels

Physical and network system access

If potential bad actors gain physical access to a database server, chances are high, that they can compromise data. Nevertheless, in modern cloud environments, we don't have any possibility to control and monitor physical access to our servers - we can only trust our cloud providers to not mess with our data. Therefore I'll not cover this topic here. Instead, I'll focus on how PostgreSQL can be connected to and how to prevent unwanted parties from gaining this network access.

First we need to distinguish the two available connection methods:

- Unix domain sockets (UDS)

- TCP/IP

Unix domain sockets

NOTE: This is kind of a general topic and does not really apply to CloudNativePG - as due to the containerized nature of the cluster you most of the time will not be able to connect from the same host as where your cluster is running.

Unix domain sockets are a way of inter-process communication of processes running on the same operating system. This method of connecting to PostgreSQL is quite fast and is the default way of connecting to a postgres server - if your client runs on the same machine as the server.

Unix-domain sockets use the usual Unix file system permission set. The parameter value is expected to be a numeric mode specified in the format accepted by the chmod and umask system calls. (To use the customary octal format the number must start with a 0 (zero).)

The default permissions are 0777, meaning anyone can connect. Reasonable alternatives are 0770 (only user and group, see also unix_socket_group) and 0700 (only user). (Note that for a Unix-domain socket, only write permission matters, so there is no point in setting or revoking read or execute permissions.)

(see the postgresql connection manual for more details).

This means, to prevent unwanted parties from accessing our server via UDS, we should set this to a non-default value. I recommend setting it to 0700 to only allow the current user (the one running the server) to connect to the database via UDS.

If your application runs always on the same machine as the server, you might consider disabling TCP/IP connectivity at all and only use UDS (as you basically block the whole network attack surface as only the local host applications can access the sever). But as most applications nowadays run on distributed, horizontally scaled infrastructure, it's more likely that most - if not all connections - are coming from different hosts, so keeping permissions on UDS as tight as possible is most of the time your best strategy.

NOTE: For the sake of completeness, you can create multiple unix domain sockets, by using the unix_socket_directories configuration option. However, in cloud environments it's most often not required.

On CloudNativePG, one can set the permissions for the UDS via the unix_socket_permissions setting. Simply add this setting to the postgres.parameters section in your CloudNativePG cluster yaml file:

1 postgresql:2 parameters:3 unix_socket_permissions: 07004 other-settings: "..."

TCP/IP

As with most modern PostgreSQL use cases you will want to connect to a postgres server or cluster from a different location/host - there one needs to use TCP/IP and therefore a networked connection.

The first layer of security here is the networking layer.

-

If possible use VLANS to separate your PostgreSQL server where ever possible

-

Configure the system to only listen on the network interfaces which are to be trusted an need to connect to the server. This can be achieved by setting the parameter

listen_addressesin the PostgreSQL configuration.1 postgresql:2 parameters:3 listen_addresses: "192.168.10.22"4 other-settings: "..."NOTE: Remote TCP/IP connections will not be possible unless the server is started with an appropriate value for the listen_addresses configuration parameter, since the default behavior is to listen for TCP/IP connections only on the local loopback address localhost.

-

A further - and very important measure - is to use firewalls to prevent - first of all who can access the server - and secondly, to prevent access to anything else - other than the PostgreSQL server. If possible, specifically set the IP address of your client. And similarly important, make sure to block any port which is not necessarily to be open. If you have a dedicated PostgreSQL node, it is sufficient to keep Port 5432 open.

In most cloud environments it's best practice to not use the hosts Firewall, but the cloud providers firewall as part of the networking. On Azure for example, make use of the Network Security Groups with their rules. They work similar to a firewall and allow to whitelist source and target addresses as well as ports.

As a general rule of thumb: Limit access to your database server as much as possible.

NOTE: For some applications - eg. when they are not hosted in the same kubernetes cluster as your PostgreSQL server - it might be difficult to whitelist specific IP addresses, as the application might have dynamic IPs. In this case we need to open up our Firewall a little bit and allow connections from a wider range of IP addresses. Below, we will see additional security measures we can take to address this issue.

Authentication

PostgreSQL provides several ways for authenticating users - and it provides a straight-forward way for configuring these options. The following means of authentication are available:

- trust: Simply allow any whitelisted IP-Address to connect. This is only recommended for local connections through unix sockets - or for very strictly manage private networks.

- password: Simple username/password authentication.

- gssapi: We can use GSSAPI to authenticate users.

- sspi: We might use SSPI to authenticate users.

- operating system username: This authentication method obtains the operating system user name of the client and checks if it matches the requested database username.

- ldap: Authenticate via well-known LDAP.

- cert: Authenticate using client certificates.

As you can see, a lot of convenient ways for authenticating a user. PostgreSQL allows to configure the authentication mode per user - providing fine grained access control. The way we can implement this is using the pg_hba.conf configuration file.

pg_hba.conf

This configuration file allows to set which users are allowed to connect to our server - using which specific authentication mechanism.

The file itself is located in the data directory of your PostgreSQL server (default /var/lib/postgresql/<postgres-version>/data).

The structure of the file is rather simple: Each line in the file corresponds to a user/database configuration. And the lines themselves are built like this:

1<connection-method> <database> <user-1> <address> <auth-method> <auth-options>2<connection-method> <database> <user-2> <address> <auth-method> <auth-options>3<connection-method> <database-2> <user-2> <address> <auth-method> <auth-options>

The file is processed from top to bottom. So the first line which meets all criterions will be used to connect a user.

(All the description below are either copied or cited from postgres manual )

connection-method: One of the following:

- local: This record matches connection attempts using Unix-domain sockets. Without a record of this type, Unix-domain socket connections are disallowed.

- host: Matches connection attempts made using TCP/IP.

hostrecords match SSL or non-SSL connection attempts as well as GSSAPI encrypted or non-GSSAPI encrypted connection attempts. Keep in mind, that pg_hba is processed from top to bottom. So if you have a host rule for a specific user - hostssl: Matches connection attempts made using TCP/IP, but only when the connection is made with SSL encryption.

- hostnossl: opposite behavior of hostssl; it only matches connection attempts made over TCP/IP that do not use SSL. Use this setting in combination with

reject(see below) very early in the pg_hba.conf file to catch reject all connections without SSL. - hostgssenc: This record matches connection attempts made using TCP/IP when the connection is made with GSSAPI encryption.

- hostnogssenc: has the opposite behavior of hostgssenc; it only matches connection attempts made over TCP/IP that do not use GSSAPI encryption. Again, be careful with this rule, as it catches ALL TCP/IP connections which do NOT use GSSAPI.

database: Define the database for which this user should be able to connect. Use the name of the database to specify a specific database. You can also specify multiple databases by separating their names with a comma. You can also specify your databases in an external file - again comma separated. The file must be in the same directory as the pg_hba.conf. Simply use @<filename> as database config option, to reference this external file.

Alternatively, you have the following special options:

- all: Match all databases

- sameuser: Matches databases which name are the same as the user which wants to connect

- samerole: Specifies, that the requested user must be a member of the role with the same name as the requested database

- replication: Specifies that the record matches if a physical replication connection is requested. This is only valid for physical replication!

user: Specify the username which the current rule is meant for. Multiple users can be supplied by separating them with commas. An interesting possibility again is to use a separate file which contains the usernames for this rule. The file needs to be located in the same dir as pg_hba.conf and contain the usernames in a comma-separated manner.

address: Define which client machine address or addresses this record matches. Can either be a host name, an IP address range or one of the following special keywords.

- IP-Address-ranges are specified in CIDR range notation

- all: Match any IP address. While this is the least secure options of all, sometimes (eg. if you host your client application on a managed hosting service with very dynamic IP addresses), it's not possible to pinpoint a specific range. Use

allin such a case. - samehost: Only match any of the servers own IP addresses

- samenet: Match any address in any subnet that the server is directly connected to. This is an interesting option for installations in company networks or private networks.

- Host names starting with a dot (

.) matches a suffix of the actual host name. So .example.com would match foo.example.com (but not just example.com).

auth-method: Specifies the authentication method to use when a connection matches this record. As this section is the most complex of all the authentication sections, I'll provide a quick summary of the most needed information, but I want to refer to the very good documentation in the PostgreSQL manual about this topic. The following options are possible:

- trust: Allow the connection unconditionally. This method allows anyone that can connect to the PostgreSQL database server to login as any PostgreSQL user they wish, without the need for a password or any other authentication. Only use this option for

localconnections or heavily controlled network environments. - reject: Reject the connection unconditionally. This is useful for “filtering out” certain users, hosts or connection methods. I like to put a

rejectrule rather at the top ofpg_hba.confwhich catcheshostnosslusers - meaning that all connections without ssl are rejected. - scram-sha-256: Perform SCRAM-SHA-256 authentication to verify the user's password. Please note that your PostgreSQL server needs to be configured to use scram-sha-256 encryption to store the users password. This is the default since PostgreSQL 13. See the postgres manual for more details.

- md5: Perform SCRAM-SHA-256 authentication to verify the user's password. The same applies as for

scram-sha-256. - password: Require the client to supply an unencrypted password for authentication. Since the password is sent in clear text over the network, this should not be used on untrusted networks

- gss: Use GSSAPI to authenticate the user

- sspi: Use SSPI to authenticate the user. This is only available on Windows.

- ident: Obtain the operating system user name of the client by contacting the ident server on the client and check if it matches the requested database user name

- peer: Obtain the client's operating system user name from the operating system and check if it matches the requested database user name.

- ldap: Authenticate using an LDAP server

- radius: Authenticate using a RADIUS server

- cert: Authenticate using SSL client certificates

- pam: Authenticate using the Pluggable Authentication Modules (PAM) service provided by the operating system

- bsd: Authenticate using the BSD Authentication service provided by the operating system

auth-options: Auth-options are optional. They are mostly depending on the chosen authentication method. Look up the individual options for your authentication method in the manual. Nevertheless, there is one very important option which is independent of the method used: clientcert. It can be specified for any records of type hostssl. This option can be set to verify-ca or verify-full. Both options require the client to present a valid (trusted) SSL certificate, while verify-full additionally enforces that the cn (Common Name) in the certificate matches the username or an applicable mapping. This setting is actually very similar to the authentication-method cert. But it allows to combine eg. scram-sha-256 (encrypted password) authentication with client certificates.

Two interesting points are worth noting here:

- A user which is not described in pg_hba.conf will not be able to connect. Not even the

postgressuper-user. - Since the file is processed from top to bottom. So the first line which meets all criterions will be used to connect a user. Typically, earlier records will have tight connection match parameters and weaker authentication methods, while later records will have looser match parameters and stronger authentication methods. For example, one might wish to use trust authentication for local TCP/IP connections but require a password for remote TCP/IP connections. In this case a record specifying trust authentication for connections from 127.0.0.1 would appear before a record specifying password authentication for a wider range of allowed client IP addresses.

An example pg_hba.conf is provided below:

1# Grant local access2local all all peer map=local34# Require client certificate authentication for streaming_replica user5hostssl postgres streaming_replica all cert6hostssl replication streaming_replica all cert7hostssl all cnpg_pooler_pgbouncer all cert89# Reject all non-ssl users10hostnossl all all 0.0.0.0/0 reject1112# Require the postgres user to present an encrypted password as well as a client certificate13hostssl all postgres 0.0.0.0/0 scram-sha-256 clientcert=verify-full14# Require the user secret_share_app_rw_user to present an encrypted password - and only allow to connect to the database secret_share_app_db15hostssl secret_share_app_db secret_share_app_rw_user 0.0.0.0/0 scram-sha-256

In general, all records can not only be granted to individual users but also roles. So it is best practice and highly recommended to create "group"-roles, assign these roles to users and then assign the privileges to the roles rather then the individual users, as this might get tedious very quickly.

1CREATE ROLE sales_team;2GRANT sales_team to user_andy;

CloudNativePG

When running a cluster with CloudNativePG there is one important thing to note: The cluster controller will automatically add a line host all all all scram-sha-256 at the very bottom of pg_hba.conf. This is done as a fallback, but requires you to catch any unwanted connection before this file. As we most probably don't want to allow to connect any user to any database by providing a password, we need to add a reject-rule before that. My go-to strategy here is to add hostnossl all all 0.0.0.0/0 reject to the cluster pg_hba.conf of the cluster-yaml.

The pg_hba.conf section in the cluster manifest can be defined as sub-object of postgres:.

1 postgresql:2 parameters:3 ...some other parameters4 pg_hba:5 - hostnossl all all 0.0.0.0/0 reject6 - hostssl all postgres 0.0.0.0/0 scram-sha-256 clientcert=verify-full

These settings result in the example pg_hba.conf provided above. As already mentioned, CloudNativePG automatically adds a fallback at the very bottom, but also adds records for the streaming_replica user.

Client certificates

For the authentication option cert as well as for the auth-option clientcert=verify-full, the client needs to authenticate using valid client certificates. The server needs to know and accept these certificates.

CloudNativePG provides a handy utilities to create the client certificates for any user or role. By using the cnpg kubectl plugin, we can run the following command, to create client certificates for our users:

1kubectl cnpg certificate -n <cluster-namespace> <name-of-new-cert> --cnpg-cluster <cnpg-cluster-name> --cnpg-user <postgres-user>

- cluster-namespace: Namespace where the CloudNativePG cluster is running

- name-of-new-cert: Kubernetes secret name - choose your desired name here

- cnpg-cluster-name: Name of the CloudNativePG cluster

- postgres-user: User for which you want to create the client certificate

After invoking the command, CloudNativePG will create a kubernetes secret which will serve as client certificate.

(If you need to manually create the client certificates, have a look in the postgres docs)

After successfully creating the certificates, we can utilize them rather easily, if our clients run in the same k8s cluster as our CloudNativePG Postgres cluster. We can simply mount the kubernetes secrets as volume mounts within our pod specification. The following example (shamelessly stolen from cloudnative's docs) demonstrates the usage of these secrets:

1apiVersion: apps/v12kind: Deployment3metadata:4 name: cert-test5spec:6 replicas: 17 selector:8 matchLabels:9 app: webtest10 template:11 metadata:12 labels:13 app: webtest14 spec:15 containers:16 - image: ghcr.io/cloudnative-pg/webtest:1.6.017 name: cert-test18 volumeMounts:19 - name: secret-volume-root-ca20 mountPath: /etc/secrets/ca21 - name: secret-volume-app22 mountPath: /etc/secrets/app23 ports:24 - containerPort: 808025 env:26 - name: DATABASE_URL27 value: >28 sslkey=/etc/secrets/app/tls.key29 sslcert=/etc/secrets/app/tls.crt30 sslrootcert=/etc/secrets/ca/ca.crt31 host=cluster-example-rw.default.svc32 dbname=app33 user=app34 sslmode=verify-full35 - name: SQL_QUERY36 value: SELECT 137 readinessProbe:38 httpGet:39 port: 808040 path: /tx41 volumes:42 - name: secret-volume-root-ca43 secret:44 secretName: cluster-example-ca45 defaultMode: 060046 - name: secret-volume-app47 secret:48 secretName: cluster-app49 defaultMode: 0600

If you can't use these certificates from within the same k8s cluster, you might download them as follows:

- To get the servers CA:

kubectl get secret <cnpg-cluster-name>-ca -n <cluster-namespace> -o json | jq -r '.data."ca.crt"' | base64 -d > certs/sslrootcert.crt. This will output your server CA tocerts/sslrootcert.crt. - To get the client certificate (tls.crt):

kubectl get secret <name-of-k8s-secret> -n <cluster-namespace> -o json | jq -r '.data."tls.crt"' | base64 -d > certs/tls.crt. This will output your client certificate tocerts/tls.crt. - To get the client key (tls.key):

kubectl get secret <name-of-k8s-secret> -n <cluster-namespace> -o json | jq -r '.data."tls.key"' | base64 -d > certs/tls.crt. This will output your client certificate tocerts/tls.crt.

IMPORTANT: The client certificates are automatically renewed every 3 months. When using them within the same cluster by utilizing volume mounts as demonstrated above - nothing to worry about. However, when manually exporting the certificates, you need to re-export them after renewal. This is the reason why for applications running outside your k8s cluster, you most probably can't utilize certificate authorization in meaningful ways. There it's the best option to require encrypted password-authentication and only allow the minimum set of operations (see next chapter).

Authorization

Additionally to authenticating users, we can define what privileges certain users are granted to. When a PostgreSQL object - like a table - is created, it is assigned an owner. The owner is normally the user that executed the creation statement. For most kinds of objects, the initial state is that only the owner can do anything with the object. To allow other roles to use it, privileges must be granted. SELECT, INSERT, UPDATE, DELETE, TRUNCATE, REFERENCES, TRIGGER, CREATE, CONNECT, TEMPORARY, EXECUTE, USAGE, SET and ALTER SYSTEM are the available privileges. The privileges applicable to a particular object vary depending on the object's type (table, function, etc.).

To change the owner of a table, you might run:

1ALTER TABLE table_name OWNER TO new_owner;

Change the "TABLE" keyword to whatever object you want to change the owner for.

To grant a specific privileges to an object, run:

1GRANT UPDATE ON <table-or-view> TO <user>;

Change "UPDATE" to the privilege you want to grant. Please note, that most of the time a user needs several privileges to being able to execute a specific action. For example for most UPDATE operations one also needs SELECT privileges.

NOTE: By default, non-owner users can not connect to a database. Therefore, granting the privilege

CONNECTis vital for any user to even being able to connect to the database.

In general, all the privileges can not only be granted to individual users but also roles (well... basically users and roles are identical in postgres but that's a topic for another time). So it is best practice and highly recommended to create "group"-roles, assign these roles to users and then assign the privileges to the roles rather then the individual users, as this might get tedious very quickly.

Encryption

As with any modern data flows, it's almost always preferable to encrypt data - at least during transport - and sometimes even in rest.

First of all, to enable tls encrypted transport, set the setting ssl in postgresql.conf to on. Note, the the default here is off.

For CloudNativePG, the cluster controller automatically sets this option to on - so nothing to do there.

Secondly, you need to create and provide the server certificates to encrypt the transport channel. Again, CloudNativePG does all the work for us and automatically creates kubernetes secrets for this job - and therefore is able to encrypt the transport channel out of the box. For more details about how one would manually create these certs, have a look in the corresponding PostgreSQL manual page.

One step further, you might also want to encrypt the data at rest. PostgreSQL does not provide meaningful solutions there. The recommended approach is to encrypt your data on the filesystem or disk level. Utilize any of the available tools to encrypt the data on your PostgreSQL filesystem. With major cloud providers this is mostly only switching a button in the settings of your disks. Also, any modern operating system - Windows, Linux, MacOS - provides filesystem encryption tools out of the box. Make use of them to encrypt your data at rest.

PERSONAL OPINION: There is actually not much reason to NOT use filesystem encryption with any storage nowadays. It's easy, reasonably fast and provides good protection against different attack vectors!

Data Access control

All the measures above are more or less in place to prevent general access to database objects and data. PostgreSQL however provides even more granularity for accessing data - Row Level Security - short RLS. They restrict, on a per-user basis, which rows can be returned by normal queries or inserted, updated, or deleted by data modification commands. If RLS is enabled, all data access needs to be defined and allowed with a Row Security Policy. If no policy exists for the table, a default-deny policy is used, meaning that no rows are visible or can be modified (The owner or superuser can still query the data - but not normal users).

To specify which rows are visible or modifiable according to a policy, an expression is required that returns a Boolean result. This expression will be evaluated for each row prior to any conditions or functions coming from the user's query.

The general syntax of creating Row Security Policies is:

1CREATE POLICY <policy-name> ON <table-name> TO <user-or-role> FOR <SELECT-or-INSERT-or-UPDATE-or-DELETE>2USING (<statement-with-boolean-result>)3WITH CHECK (<statement-with-boolean-result>)

- The

TO <user-or-role>clause can be omitted - then the policy applies to any user and role - The

FOR <SELECT-or-INSERT-or-UPDATE-or-DELETE>can be omitted as well - applying the policy to allSELECT,INSERT,UPDATEandDELETE - Existing table rows are checked against the expression specified in USING, while new rows that would be created via INSERT or UPDATE are checked against the expression specified in WITH CHECK. So, the expression in the

USINGsection will be added as aWHEREclause to your existing data - for example duringSELECT. TheWITH CHECKstatement will be used to check your "incoming" data - only if they meet this condition will they be used forINSERTorUPDATE. - You can have both,

USINGandWITH CHECK.

Let's see the following example:

-

Enable RLS on a table by running:

ALTER TABLE my_table ENABLE ROW LEVEL SECURITY; -

Create the Row Security Policy by running:

1CREATE POLICY my_policy ON my_table TO my_role FOR SELECT2USING (tenant = "tenant1");This policy would only allow

my_roleto query data where the columntenantequals "tenant1".Note that you can also add more complex statements here. For example:

1USING (tenant = (SELECT tenant from mapping_table where user = current_user) )In this example we get the tenant information from a mapping table. The term

current_useris a Postgres system information, providing us the name of the executing user.

RLS is a highly suggested way of making sure that your application users can really only read the data they should be able to read. Furthermore, its a great way of reducing logic from your application. Instead of implementing complex checks in your application about which user might read which data, you might transfer this logic to the database layer - and get the peace of mind that PostgreSQL RLS are battle-tested data security mechanisms

Summary

This post strives to give an overview about the many layers of PostgreSQL security:

- Physical and network system access

- Authentication

- Authorization

- Encryption

- Data access

While there are indeed many settings to make, CloudNativePG again helps us tremendously by first of all applying secure defaults and providing snippets and utilities to make our security life easy.

To harden a CloudNativePG cluster, the suggested steps are:

-

Prevent any means of unwanted physical access to your server

-

Use Firewalls to prevent any unwanted network access to your kubernetes nodes. If you use a managed kubernetes service, use Network Policies to achieve the same

-

Use the pg_hba mechanism to control who can connect to your database. Please pay attention, that CloudNativePG will automatically add an

host all all all scram-sha-256rule at the end of the file - as sort of a fallback.1 postgresql:2 parameters:3 ...some other parameters4 pg_hba:5 - hostnossl all all 0.0.0.0/0 reject6 - hostssl all postgres 0.0.0.0/0 scram-sha-256 clientcert=verify-full -

Use the

GRANTSQL command to grant access to specific database objects. Use this as careful as possible - always try to offer the least amount of privileges as possible. -

Only use

hostsslrules inpg_hba.confto enforce TLS transport encryption. CloudNativePG automatically enables SSL on the server. -

Use filesystem encryption to encrypt your data at rest.

-

Whenever possible, use client certificates to authenticate your client. Consider using both, password and certificate authentication. This can be done by adding a

pg_hba.confrule as follows:hostssl all postgres 0.0.0.0/0 scram-sha-256 clientcert=verify-full.With CloudNativePG you create client certificates by utilizing their handy cnpg kubectl plugin:

1kubectl cnpg certificate -n <cluster-namespace> <name-of-new-cert> --cnpg-cluster <cnpg-cluster-name> --cnpg-user <postgres-user>These kubernetes secrets are then automatically renewed!

-

Use Row Level Security for fine-grained control who can read which rows in specific tables.

1CREATE POLICY <policy-name> ON <table-name> TO <user-or-role> FOR <SELECT-or-INSERT-or-UPDATE-or-DELETE>2USING (<statement-with-boolean-result>)3WITH CHECK (<statement-with-boolean-result>)

------------------

Interested in how to train your very own Large Language Model?

We prepared a well-researched guide for how to use the latest advancements in Open Source technology to fine-tune your own LLM. This has many advantages like:

- Cost control

- Data privacy

- Excellent performance - adjusted specifically for your intended use